How to Deploy PGvector and PGadmin on Docker and Ditch Pinecone

Learn how to install the Postgres PGVector database and PGAdmin with Docker Compose for your projects.

PostgreSQL is a powerful, open-source object-relational database system known for its robustness, extensibility, and compliance with SQL standards. It has been actively developed for over 35 years and is widely used for managing complex data workloads.

PGVector is an extension for PostgreSQL that enables vector similarity search, allowing you to store, query, and index vectors. This is particularly useful for applications involving high-dimensional data, such as recommendation systems, image search, and natural language processing.
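To make "vector similarity search" concrete, here is a minimal Python sketch of what such a query computes: it ranks stored vectors by cosine distance to a query vector. The toy vectors, labels, and function names are made up for illustration. PGVector does this work inside PostgreSQL and can use an index instead of scanning every row.

```python
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def nearest(query, items, k=2):
    """Rank (label, vector) pairs by cosine distance to the query, keep the k closest."""
    ranked = sorted(items, key=lambda item: cosine_distance(query, item[1]))
    return [label for label, _ in ranked[:k]]

# Toy 3-dimensional "embeddings"; real models emit hundreds or thousands of dimensions.
ITEMS = [
    ("cat", [0.9, 0.1, 0.0]),
    ("dog", [0.8, 0.2, 0.1]),
    ("car", [0.0, 0.1, 0.9]),
]

if __name__ == "__main__":
    print(nearest([1.0, 0.0, 0.0], ITEMS))  # prints ['cat', 'dog']
```

A smaller distance means the vectors point in a more similar direction, which is why "cat" and "dog" rank ahead of "car" for this query.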

PGAdmin is a web-based graphical user interface (GUI) management tool for PostgreSQL. It simplifies database administration tasks, providing a user-friendly interface for executing SQL queries, managing database objects, and monitoring database performance.

Pgvector can store embeddings generated by AI models, such as those from OpenAI. This allows for efficient similarity searches and retrieval-augmented generation (RAG), where embeddings help find relevant information to augment AI model responses.

Pgvector turns PostgreSQL into a vector database that can store and query embeddings alongside your regular relational data. With enhancements from pgvectorscale, PostgreSQL can compete with specialized vector databases, offering high performance and scalability at a lower cost.
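As a rough sketch of how embeddings might be stored and searched once the database is running, the SQL below enables the extension, creates a table with a vector column, and orders rows by cosine distance. The documents table, its columns, and the 3-dimensional size are hypothetical. The %s placeholders assume a Python driver such as psycopg, which is not part of this tutorial.

```python
def to_vector_literal(values):
    """Format numbers as a pgvector text literal, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"

# Hypothetical table and column names, chosen for illustration only.
SETUP_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS documents (
    id bigserial PRIMARY KEY,
    content text,
    embedding vector(3)  -- set this to your embedding model's dimension
);
"""

# <=> is pgvector's cosine-distance operator; <-> would use L2 distance instead.
# The two %s placeholders stand for the query vector literal and the row limit.
SEARCH_SQL = """
SELECT id, content
FROM documents
ORDER BY embedding <=> %s::vector
LIMIT %s;
"""

if __name__ == "__main__":
    print(SETUP_SQL)
    print(SEARCH_SQL)
    print(to_vector_literal([0.1, 0.2, 0.3]))  # prints [0.1,0.2,0.3]
```

In a RAG setup, the query vector would be the embedding of the user's question, and the returned rows would be the passages handed to the model as context.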

If you are interested in free, open-source, self-hosted apps, you can check the self-hosted section on toolhunt.net.

How to Deploy PGvector and PGadmin on Docker

1. Prerequisites

Before you begin, make sure you have the following prerequisites in place:

- A VPS where you can host PGVector; you can use one from Hetzner.

- Docker and Dockge installed on your server; see Dockge - Portainer Alternative for Docker Management for the full tutorial.

- CloudFlare Tunnels configured for your VPS; the details are in the article where I deployed Dockge.

Dockge and CloudFlare Tunnels are optional. They are here only to make it easier to deploy the compose file and to add a subdomain for PGAdmin, so we can access it over SSL with CloudFlare protection. You can use whatever stack you like.

You can also use Traefik as a reverse proxy for your apps. I have written a full tutorial, which also covers installing Dockge to manage your containers: How to Use Traefik as A Reverse Proxy in Docker

2. Docker Compose File

The following Docker Compose file sets up a PostgreSQL database with the PGVector extension and a PGAdmin instance for database management.

version: "3.8"

services:
  db:
    hostname: pgvector_db
    container_name: pgvector_db_container
    image: ankane/pgvector
    ports:
      - 5432:5432
    restart: unless-stopped
    environment:
      - POSTGRES_DB=${POSTGRES_DB}
      - POSTGRES_USER=${POSTGRES_USER}
      - POSTGRES_PASSWORD=${POSTGRES_PASSWORD}
      # "trust" lets any client connect without a password; remove this line if port 5432 is reachable from outside
      - POSTGRES_HOST_AUTH_METHOD=trust
    volumes:
      - ./local_pgdata:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U ${POSTGRES_USER} -d ${POSTGRES_DB}"]
      interval: 5s
      timeout: 5s
      retries: 5

  pgadmin:
    image: dpage/pgadmin4
    container_name: pgadmin4_container
    restart: unless-stopped
    ports:
      - 5016:80
    # UID and GID are not defined in the .env file; set them in your shell or .env, otherwise Compose substitutes empty strings
    user: "$UID:$GID"
    environment:
      - PGADMIN_DEFAULT_EMAIL=${PGADMIN_DEFAULT_EMAIL}
      - PGADMIN_DEFAULT_PASSWORD=${PGADMIN_DEFAULT_PASSWORD}
    volumes:
      - ./pgadmin-data:/var/lib/pgadmin

Explanation of the Docker Compose File

- services: Defines the services to be run.
  - db: This service sets up the PostgreSQL database with the PGVector extension.
    - hostname: Sets the hostname for the container.
    - container_name: Names the container for easier identification.
    - image: Uses the ankane/pgvector image, which includes PostgreSQL with the PGVector extension.
    - ports: Maps port 5432 on the host to port 5432 in the container.
    - restart: Ensures the container restarts unless stopped manually.
    - environment: Sets environment variables for the PostgreSQL database.
    - volumes: Mounts a local directory to persist PostgreSQL data.
    - healthcheck: Configures a health check to ensure the database is ready.
  - pgadmin: This service sets up the PGAdmin web interface.
    - image: Uses the dpage/pgadmin4 image.
    - container_name: Names the container for easier identification.
    - restart: Ensures the container restarts unless stopped manually.
    - ports: Maps port 5016 on the host to port 80 in the container.
    - user: Sets the user ID and group ID for the container.
    - environment: Sets environment variables for PGAdmin.
    - volumes: Mounts a local directory to persist PGAdmin data.
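Because the db service publishes port 5432 and also sets a hostname, the address a client uses depends on where it runs. The sketch below builds a connection URI for both cases. The build_dsn helper is hypothetical, and the credentials are the sample values from the .env file in the next step.

```python
from urllib.parse import quote

def build_dsn(user, password, database, host="localhost", port=5432):
    """Build a postgresql:// connection URI, percent-encoding the credentials."""
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )

if __name__ == "__main__":
    # From the Docker host: use the published port on localhost.
    print(build_dsn("user", "pass", "vector"))
    # From another container in the same Compose project: use the db hostname.
    print(build_dsn("user", "pass", "vector", host="pgvector_db"))
```

Percent-encoding matters once you replace the sample password with a real one, since characters such as @ or / would otherwise break the URI.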

3. Environment Variables File

Create a .env file with the following content to set the necessary environment variables:

POSTGRES_USER='user'
POSTGRES_PASSWORD='pass'
POSTGRES_DB='vector'
PGADMIN_DEFAULT_PASSWORD=pgpass
PGADMIN_DEFAULT_EMAIL=[email protected]

Here you can set your own users and passwords. The POSTGRES values are for the database, and the PGADMIN values are for logging in to the UI that administers the PGVector database. Replace the PGADMIN_DEFAULT_EMAIL value with your own email address.
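Before deploying, it can help to confirm that every variable the compose file references is actually set. The following is a minimal sketch of such a check. The parser handles only simple KEY=value lines and is not a full implementation of the .env syntax that Docker Compose accepts. The function names are made up for this example.

```python
REQUIRED_KEYS = (
    "POSTGRES_USER",
    "POSTGRES_PASSWORD",
    "POSTGRES_DB",
    "PGADMIN_DEFAULT_EMAIL",
    "PGADMIN_DEFAULT_PASSWORD",
)

def parse_env(text):
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding single or double quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values

def missing_keys(values):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]

if __name__ == "__main__":
    sample = "POSTGRES_USER='user'\nPOSTGRES_PASSWORD='pass'\nPOSTGRES_DB='vector'\n"
    print(missing_keys(parse_env(sample)))
    # prints ['PGADMIN_DEFAULT_EMAIL', 'PGADMIN_DEFAULT_PASSWORD']
```

To check a real file, read it first, for example with open(".env").read(), and pass the text to parse_env.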

4. Deploying PGVector and PGAdmin

To deploy the services, run the following command:

docker-compose up -d

This command will start the PostgreSQL database with PGVector and the PGAdmin web interface in detached mode.

If you are interested in monitoring server resources such as CPU, memory, and disk space, you can check: How To Monitor Server and Docker Resources
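Containers started in detached mode can take a few seconds to accept connections. One way to confirm that the published ports are reachable is a small TCP check like the sketch below. The wait_for_port helper is hypothetical, and it only confirms that something is listening, not that PostgreSQL has finished initializing (the compose healthcheck covers that).

```python
import socket
import time

def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For this setup you would call wait_for_port("localhost", 5432) for the database and wait_for_port("localhost", 5016) for PGAdmin, matching the ports published in the compose file.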

5. Accessing the PostgreSQL Database with PGAdmin

- Open your web browser and navigate to http://ip:5016.
- Log in using the email and password specified in the .env file.
- Register a new server in PGAdmin using the following details:
  - Host name/address: pgvector_db
  - Port: 5432
  - Username: The value of POSTGRES_USER from the .env file.
  - Password: The value of POSTGRES_PASSWORD from the .env file.

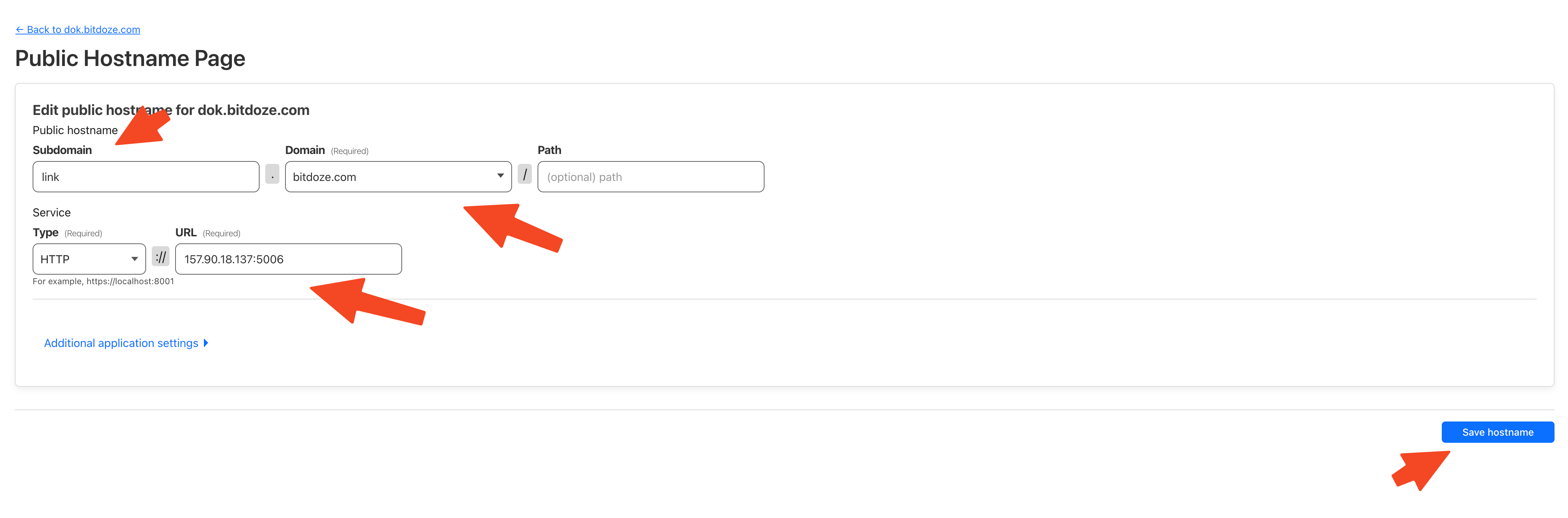
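If you would rather not register the server by hand, the PGAdmin container can preload server definitions from a JSON file. The sketch below generates such a file from the same details as above. Treat the key names and the /pgadmin4/servers.json mount path as assumptions to verify against the PGAdmin container documentation for your version. The pgadmin_servers helper and the "pgvector" display name are made up for this example.

```python
import json

def pgadmin_servers(name, host, port, username, maintenance_db):
    """Build a PGAdmin server-definition document with a single entry."""
    return {
        "Servers": {
            "1": {
                "Name": name,
                "Group": "Servers",
                "Host": host,
                "Port": port,
                "MaintenanceDB": maintenance_db,
                "Username": username,
                "SSLMode": "prefer",
            }
        }
    }

if __name__ == "__main__":
    # Values mirror the sample .env file and the db hostname from the compose file.
    doc = pgadmin_servers("pgvector", "pgvector_db", 5432, "user", "vector")
    print(json.dumps(doc, indent=2))
```

The output would be saved as servers.json and mounted into the pgadmin service as an extra volume. The password is not part of the file, so PGAdmin still asks for it on first connect.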

6. Configure the CloudFlare Tunnels

You need to tell the CloudFlare Tunnel which port to use. Go to Access - Tunnels, choose the tunnel you created, and add a hostname that links a domain or subdomain to the service and port (here, PGAdmin on port 5016).

You can also check Setup CloudPanel as Reverse Proxy with Docker and Dockge to use CloudPanel as a reverse proxy for your Docker containers.

Conclusion

Deploying PostgreSQL with PGVector and PGAdmin using Docker simplifies the setup process and provides a powerful environment for managing high-dimensional data and performing vector similarity searches. This setup is ideal for AI-based applications and other use cases requiring efficient data management and analysis.